AWS Databricks Integration Setup

Complete guide for integrating AWS Databricks billing data with the nOps platform.

Prerequisites

- AWS Account: Administrative access to deploy CloudFormation stacks

- S3 Bucket: Existing bucket or ability to create one for billing data storage

- AWS Databricks Workspace: Administrative access to create and schedule jobs

- IAM Permissions: Ability to create and manage IAM roles and policies


How It Works

The AWS Databricks integration follows this process:

- S3 Bucket Setup - Configure an S3 bucket for billing data storage

- CloudFormation Deployment - Deploy the nOps-provided stack for secure access permissions

- Databricks Job Creation - Schedule a daily job to export billing data to S3

- Automated Data Collection - nOps retrieves and processes the data for Explorer

note

Ensure you're logged into the correct AWS account throughout the setup process.

Setup Instructions

Step 1: Access nOps Integrations

- Navigate to Organization Settings → Integrations → Inform

- Select Databricks from the available integrations

Step 2: Configure S3 Bucket

- Select your AWS account from the dropdown

- Enter bucket details:

  - Bucket Name: Your S3 bucket name

  - Prefix: A unique prefix (e.g., nops/) for file organization

- Click Setup to save the configuration

important

If you don't have an S3 bucket configured for Databricks, follow the S3 Bucket Setup Guide first.
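In the steps above, the bucket name and prefix together determine where the export files are stored. The helper below is a hypothetical sketch, not part of nOps or AWS tooling. It assumes the export files are written under that prefix, checks only the basic S3 bucket naming rules (length and allowed characters), and normalizes the prefix so that `nops`, `/nops` and `nops/` all refer to the same location.

```python
import re

# Basic S3 bucket naming rules: 3-63 characters, lowercase letters, digits,
# dots and hyphens, starting and ending with a letter or digit.
# (Not every AWS naming rule is checked here.)
BUCKET_NAME_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def export_location(bucket: str, prefix: str) -> str:
    """Hypothetical helper: build the s3:// URI for a bucket and prefix."""
    if not BUCKET_NAME_RE.match(bucket):
        raise ValueError(f"not a valid S3 bucket name: {bucket!r}")
    # Drop leading/trailing slashes, then append exactly one trailing slash.
    cleaned = prefix.strip("/")
    if not cleaned:
        # The setup form asks for a unique prefix, so an empty one is rejected.
        raise ValueError("prefix must not be empty")
    return f"s3://{bucket}/{cleaned}/"


print(export_location("my-billing-bucket", "nops"))  # placeholder bucket name
```

Normalizing the prefix up front avoids keys such as `nops//file` when a trailing slash is entered in one place but not another.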

Step 3: Deploy CloudFormation Stack

- You'll be redirected to AWS CloudFormation with pre-filled parameters

- Check the acknowledgment box for IAM resource creation

- Click Create Stack

note

Ensure the stack deploys successfully (status CREATE_COMPLETE). nOps cannot access your billing data without it.
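To confirm the deployment outside the console, you can read the stack status with boto3's CloudFormation `describe_stacks` call. The sketch below is an illustration, not an nOps tool. It treats `CREATE_COMPLETE` and `UPDATE_COMPLETE` as success, and the stack name is a placeholder for whatever name you kept in Step 3. If the stack does not exist, `describe_stacks` raises a botocore `ClientError`.

```python
# Sketch: check whether a CloudFormation stack finished deploying.
# `cf_client` is expected to be a boto3 CloudFormation client, e.g.
# boto3.client("cloudformation"). It is passed in so the logic can be
# exercised without AWS credentials.

SUCCESS_STATUSES = {"CREATE_COMPLETE", "UPDATE_COMPLETE"}


def stack_deployed(cf_client, stack_name: str) -> bool:
    """Return True if the stack reached a successful terminal status."""
    response = cf_client.describe_stacks(StackName=stack_name)
    status = response["Stacks"][0]["StackStatus"]
    return status in SUCCESS_STATUSES
```

With credentials for the correct account configured, you might call `stack_deployed(boto3.client("cloudformation"), "<your-stack-name>")`. To block until creation finishes, boto3 also provides a `stack_create_complete` waiter through `cf_client.get_waiter("stack_create_complete").wait(StackName=...)`.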

Step 4: Create Databricks Export Job

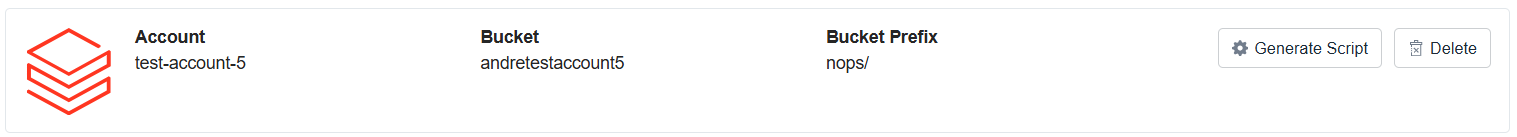

After successful CloudFormation deployment, you'll see the integration overview:

- Generate Script:

  - Click the Generate Script button

  - Copy the script to your clipboard

- Create Databricks Notebook:

  - Log in to your Databricks workspace

  - Navigate to Workspace → Create → Notebook

  - Name it (e.g., NopsDatabricksBillingDataUploader)

  - Paste the copied script

- Schedule the Job:

  - Click Schedule in the notebook toolbar

  - Configure the schedule settings:

    - Set frequency to Every 1 day

    - Select an appropriate compute cluster

    - Click More options → +Add

    - Enter role_arn as the key and the role ARN as the value

  - Click Create to finalize the schedule

- Configure Job Parameters:

  - In the Parameters section, add the role ARN parameter:

    - Enter role_arn in the key field and the actual role ARN in the value field
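If you prefer to define the job through the Databricks Jobs API 2.1 (`POST /api/2.1/jobs/create`) instead of the notebook UI, the settings above map roughly onto the request body built below. This is a sketch under assumptions, not the nOps-generated configuration:

- The notebook path, cluster ID, task key and role ARN are placeholders.
- The 00:00 UTC run time is an arbitrary choice.
- The ARN check only verifies the general `arn:aws:iam::<12-digit account>:role/<name>` shape.

Inside a notebook, a parameter passed this way is usually read with `dbutils.widgets.get("role_arn")`. This sketch does not confirm whether the generated script reads it that way.

```python
import json
import re

# General shape of an IAM role ARN; the partition (aws, aws-cn, aws-us-gov) may vary.
ROLE_ARN_RE = re.compile(r"^arn:aws[a-z-]*:iam::\d{12}:role/[\w+=,.@/-]+$")


def build_job_settings(notebook_path: str, cluster_id: str, role_arn: str) -> dict:
    """Build a Jobs API 2.1 create-job body that runs the notebook once a day."""
    if not ROLE_ARN_RE.match(role_arn):
        raise ValueError(f"not an IAM role ARN: {role_arn!r}")
    return {
        "name": "NopsDatabricksBillingDataUploader",
        "tasks": [
            {
                "task_key": "upload_billing_data",  # placeholder task key
                "existing_cluster_id": cluster_id,
                "notebook_task": {
                    "notebook_path": notebook_path,
                    # Same key/value pair as the role_arn parameter in the UI steps.
                    "base_parameters": {"role_arn": role_arn},
                },
            }
        ],
        # Quartz cron fields: second minute hour day-of-month month day-of-week.
        # "0 0 0 * * ?" fires once a day at 00:00 in the given time zone.
        "schedule": {
            "quartz_cron_expression": "0 0 0 * * ?",
            "timezone_id": "UTC",
            "pause_status": "UNPAUSED",
        },
    }


settings = build_job_settings(
    "/Users/someone@example.com/NopsDatabricksBillingDataUploader",  # placeholder path
    "0123-456789-abcdefgh",  # placeholder cluster ID
    "arn:aws:iam::123456789012:role/ExampleNopsRole",  # placeholder role ARN
)
print(json.dumps(settings, indent=2))
```

The ARN is validated before the body is built. A mistyped or truncated ARN then fails immediately, instead of surfacing later as a failed daily run.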

Next Steps

- Monitor Integration: Data will appear in Explorer within 24 hours

- Optimize Usage: Use nOps Explorer tools to identify optimization opportunities

- Set Alerts: Configure cost alerts and notifications for your Databricks usage
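While you wait for data to appear in Explorer, you can check whether the daily job is writing files under the configured prefix. The sketch below lists objects with boto3's `list_objects_v2` paginator and keeps those modified within a recent window. The bucket and prefix values are placeholders. The sketch also assumes each run writes at least one new object; the actual file layout produced by the nOps script is not described here.

```python
from datetime import datetime, timedelta, timezone

# Sketch: find export files written recently under the Step 2 prefix.
# `s3_client` is expected to be a boto3 S3 client, e.g. boto3.client("s3").
# It is passed in so the logic can be tried without AWS access.


def recent_exports(s3_client, bucket: str, prefix: str, max_age_hours: int = 24) -> list:
    """Return keys under `prefix` modified within the last `max_age_hours` hours."""
    cutoff = datetime.now(timezone.utc) - timedelta(hours=max_age_hours)
    keys = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is missing from a page when no objects match the prefix.
        for obj in page.get("Contents", []):
            # boto3 returns LastModified as a timezone-aware datetime.
            if obj["LastModified"] >= cutoff:
                keys.append(obj["Key"])
    return keys
```

For example, `recent_exports(boto3.client("s3"), "my-billing-bucket", "nops/")` returning an empty list a day after scheduling suggests checking the job's run history in Databricks.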

For general questions about Databricks integrations, see the main Databricks Exports page.